Got #DeepSeek Coder 33B running on my desktop's #AMDGPU card with #ollama.

First off, I tested its ability to generate and understand #Rust code. Unfortunately, it falls into the same confusion as the smaller 6.7B model.

https://gist.github.com/codewiz/c6bd627ec38c9bc0f615f4a32da0490e

#ollama #llm #deepseek

To be completely fair, thread safety and atomics are advanced topics.

Several humans I have interviewed for engineering positions would also have a lot of trouble answering these questions. I couldn't write this code on a whiteboard without looking at the Rust library docs.

The main problem here is that the model invents weak excuses to justify Arc<AtomicUsize>, which shows poor reasoning skills.
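
For anyone who hasn't opened the gist, here is a minimal sketch of when Arc<AtomicUsize> is and isn't needed. It is an illustration only, not the code from the gist, and the function names `count_scoped` and `count_spawned` are made up for this example. The AtomicUsize alone provides the thread-safe mutation; Arc only adds shared ownership, which matters when threads may outlive the scope that created the counter.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

/// Scoped threads may borrow the counter, so a plain AtomicUsize is enough.
/// (Hypothetical helper, for illustration only.)
fn count_scoped(threads: usize, per_thread: usize) -> usize {
    let counter = AtomicUsize::new(0);
    thread::scope(|s| {
        for _ in 0..threads {
            // The closure borrows `counter`; the scope guarantees every
            // thread is joined before `counter` goes out of scope.
            s.spawn(|| {
                for _ in 0..per_thread {
                    counter.fetch_add(1, Ordering::Relaxed);
                }
            });
        }
    });
    counter.load(Ordering::Relaxed)
}

/// `thread::spawn` requires a 'static closure, so the counter needs shared
/// ownership: this is the case where Arc<AtomicUsize> is justified.
/// (Hypothetical helper, for illustration only.)
fn count_spawned(threads: usize, per_thread: usize) -> usize {
    let counter = Arc::new(AtomicUsize::new(0));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..per_thread {
                    counter.fetch_add(1, Ordering::Relaxed);
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    counter.load(Ordering::Relaxed)
}

fn main() {
    println!("scoped:  {}", count_scoped(4, 1000));
    println!("spawned: {}", count_spawned(4, 1000));
}
```

Both versions count correctly; the difference is only in who owns the counter. A good answer to "why Arc here?" is about lifetimes and ownership, not about atomicity.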

Larger models like #GPT4 should do better with my #Rust #coding questions (haven't tried yet).

Indeed, #GPT4o nails my Rust + thread safety questions:

https://chatgpt.com/share/2f146510-d5d6-49b6-82fb-b8443666c06b

@codewiz Damn, that is good. The way it offers the type annotated version of the example when you asked for the type of one variable is also really nice.

@penguin42 Check my last post on Codestral.

Hard to tell if it's better or worse than GPT-4o...

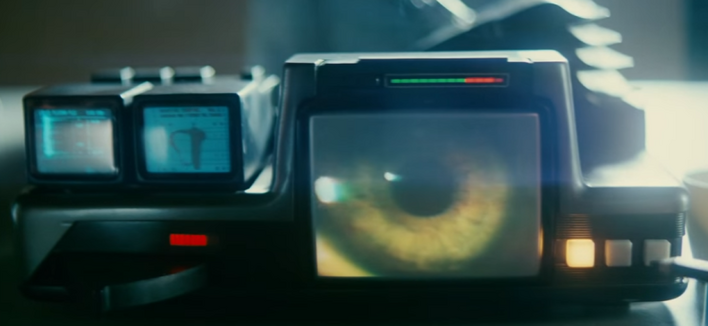

...it typically takes twenty to thirty cross-referenced questions to detect a Nexus-60B model

@codewiz Anything that can remember Rust macro syntax from memory must be a replicant....

@codewiz Now, tell me about your mother....